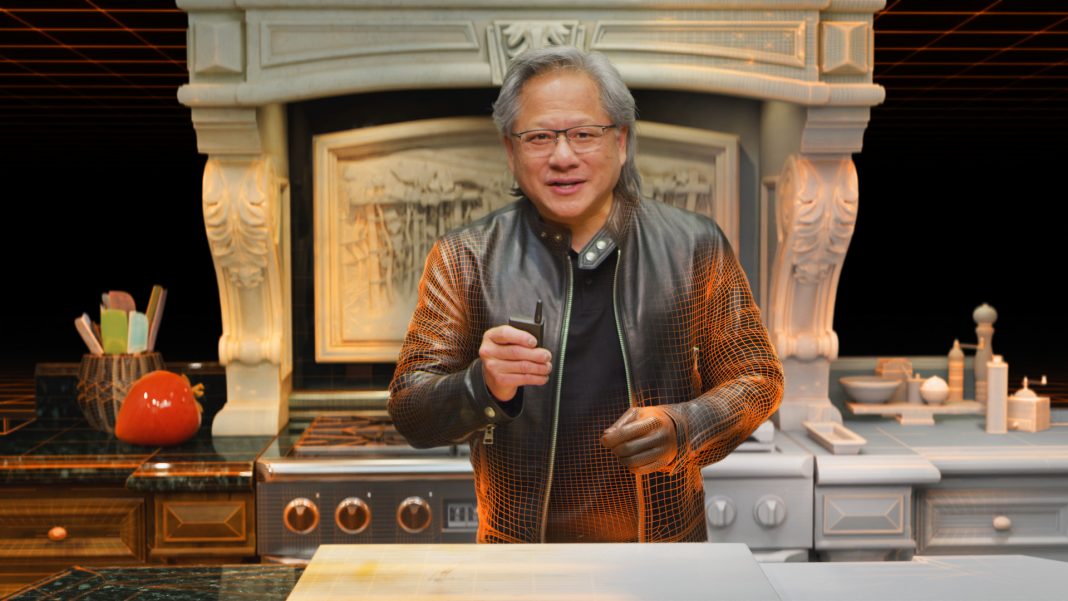

Part of Nvidia’s last major keynote was delivered by a virtual version of Jensen Huang, the company’s CEO. It was a publicity stunt for Nvidia’s products that required a significant investment in technology.

Nvidia has just given new meaning to the term “virtual keynote.” During the presentation of its Omniverse in April 2021, the company, which specializes in artificial intelligence and graphics card design, fooled the public by having part of the conference hosted by a digital clone of its CEO, Jensen Huang.

Over the roughly two-hour conference, a virtual avatar of Jensen Huang appeared for 14 seconds, almost without anyone noticing. These few seconds may seem short compared to the total length of the conference, but they took a great deal of work.

A clone created by AI

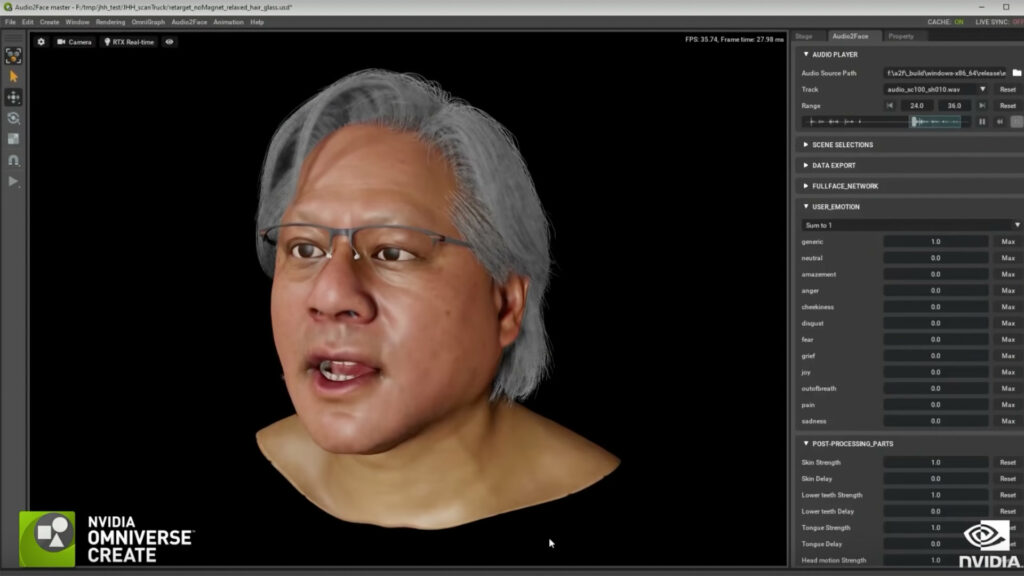

First, Nvidia’s teams created a 3D model of Jensen Huang by filming him from every angle with a large number of cameras. Then an actor in a motion-capture suit re-read old speeches by the CEO while mimicking his movements. This allowed Nvidia to create 21 3D models, all of which were analyzed to build a convincing virtual skeleton. An algorithm was also trained to replicate the CEO’s facial expressions to add a layer of realism.

Then a program called Audio2Face was tasked with moving the face of the 3D clone to match the text that the real Jensen Huang had read. Another program, called Audio2Gestures, allowed Nvidia to move the arms and body of the virtual mannequin according to what was being read. By combining these two technologies, the 3D clone was able to express itself physically as a real human would.

The result of this experiment can be seen at about 1:02:42 in Nvidia’s video of the event. And while the virtual model is a bit stiff, the illusion still works well.

Towards more disguised deepfakes?

An engineer who worked on the virtual Jensen explains, in a documentary dedicated to the making of this conference, that “unlike creating a virtual character in a movie, where it is possible to collect a lot of data, here we have very little content to start with.” So the challenge was to create a convincing double from Huang’s 3D scan and nothing else. As for moving the mouth and synchronizing the movements with the text, “it’s all done thanks to artificial intelligence,” Nvidia says.

The feat here, then, is not creating a convincing virtual human, something video games have been doing very effectively for a few years, but creating a virtual double from a very small data set. “Our challenge was to create tools to create virtual humans more easily,” explains the engineer in charge of the project. Creating a virtual Jensen Huang is therefore not a simple publicity stunt: Nvidia’s algorithms can facilitate the creation of 3D characters, whether for video games or cinema.

One can imagine that by analyzing film characters and the way they speak and move, it will become possible to create convincing doubles. Perhaps more convincing than what we’ve seen in recent Star Wars movies or series.

